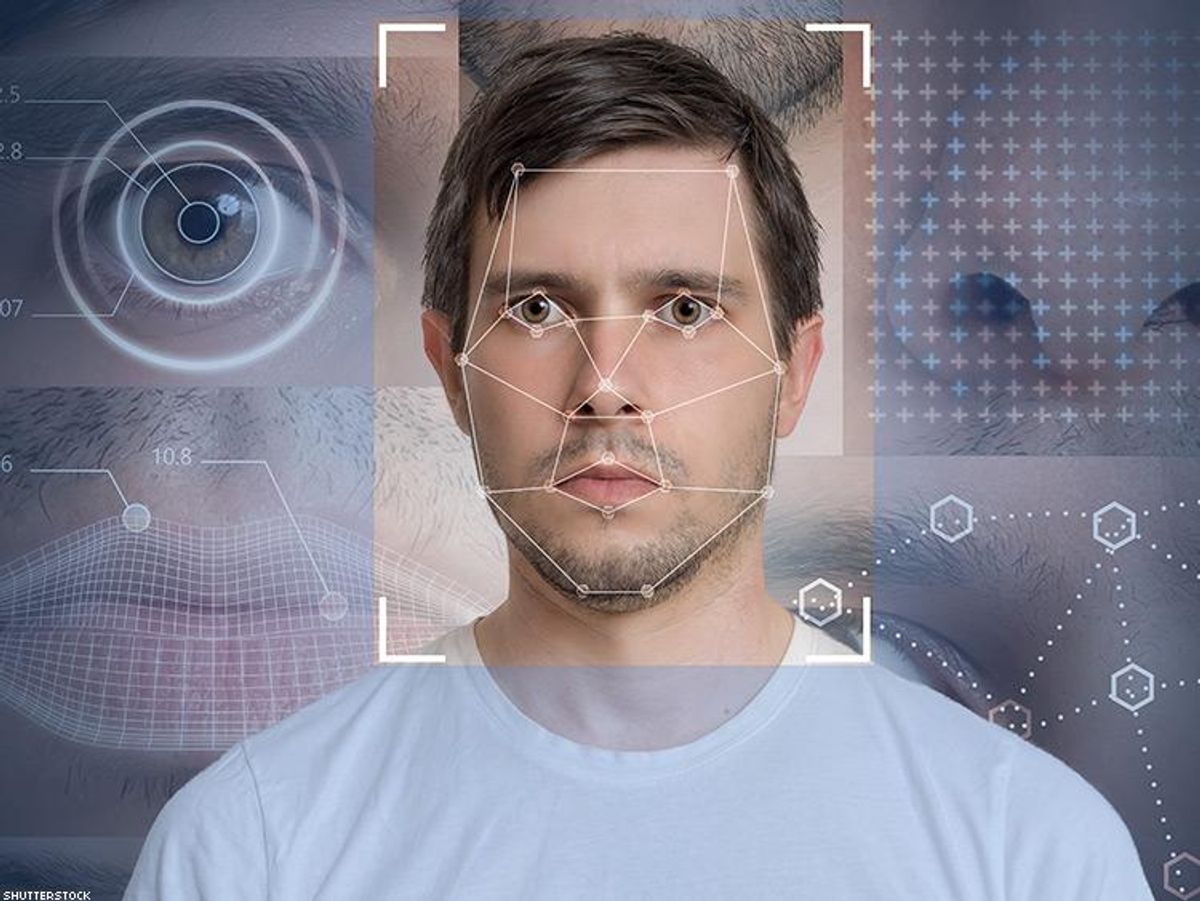

Artificial intelligence can read a person's sexual orientation with startling accuracy.

A new study from Stanford University found that a computer algorithm could, in the majority of cases, identify whether a person was gay or straight from a single photograph of his or her face.

The AI was accurate in 81 percent of cases for men and 74 percent for women. Accuracy rose to 91 percent and 83 percent, respectively, when the algorithm was given five photographs of a person. By contrast, a human trying to determine a person's sexual orientation was right 61 percent of the time for men and 54 percent of the time for women.

The algorithm examined both facial features and grooming, and the results suggested that genetic factors could physically distinguish queer people from their straight peers. "Consistent with the prenatal hormone theory of sexual orientation, gay men and women tended to have gender-atypical facial morphology, expression, and grooming styles," the study noted.

The study also suggested that this technology could be dangerous in the wrong hands.

"Given that companies and governments are increasingly using computer vision algorithms to detect people's intimate traits, our findings expose a threat to the privacy and safety of gay men and women," it stated.

Nick Rule, an associate professor of psychology at the University of Toronto and a "gaydar" science expert, also expressed concerns in an interview with The Guardian.

"It's certainly unsettling. Like any new tool, if it gets into the wrong hands, it can be used for ill purposes," said Rule. "If you can start profiling people based on their appearance, then identifying them and doing horrible things to them, that's really bad."

"What the authors have done here is to make a very bold statement about how powerful this can be. Now we know that we need protections," he added.
